NLP: End to End Text Processing for Beginners

Complete Text Processing for Beginners

Everything we express (either verbally or in written) carries huge amounts of information. The topic we choose, our tone, our selection of words, everything adds some type of information that can be interpreted and value can be extracted from it. In theory, we can understand and even predict human behavior using that information.

But there is a problem: one person may generate hundreds or thousands of words in a declaration, each sentence with its corresponding complexity. If you want to scale and analyze several hundreds, thousands, or millions of people or declarations in a given geography, then the situation is unmanageable.

Data generated from conversations, declarations, or even tweets are examples of unstructured data. Unstructured data doesn’t fit neatly into the traditional row and column structure of relational databases, and represent the vast majority of data available in the actual world. It is messy and hard to manipulate. Nevertheless, thanks to the advances in disciplines like machine learning, a big revolution is going on regarding this topic. Nowadays it is no longer about trying to interpret text or speech based on its keywords (the old fashioned mechanical way), but about understanding the meaning behind those words (the cognitive way). This way, it is possible to detect figures of speech like irony or even perform sentiment analysis.

Natural Language Processing or NLP is a field of artificial intelligence that gives the machines the ability to read, understand and derive meaning from human languages.

Ref:

Watch Full Video:

Dataset:

https://www.kaggle.com/kazanova/sentiment140/data#

Installing libraries

SpaCy is an open-source software library that is published and distributed under MIT license, and is developed for performing simple to advanced Natural Language Processing (N.L.P) tasks such as tokenization, part-of-speech tagging, named entity recognition, text classification, calculating semantic similarities between text, lemmatization, and dependency parsing, among others.

# pip install -U spacy # pip install -U spacy-lookups-data # python -m spacy download en_core_web_sm # python -m spacy download en_core_web_md # python -m spacy download en_core_web_lg

In this article, we are going to perform the below tasks.

General Feature Extraction

- File loading

- Word counts

- Characters count

- Average characters per word

- Stop words count

- Count #HashTags and @Mentions

- If numeric digits are present in twitts

- Upper case word counts

Preprocessing and Cleaning

- Lower case

- Contraction to Expansion

- Emails removal and counts

- URLs removal and counts

- Removal of

RT - Removal of Special Characters

- Removal of multiple spaces

- Removal of HTML tags

- Removal of accented characters

- Removal of Stop Words

- Conversion into base form of words

- Common Occuring words Removal

- Rare Occuring words Removal

- Word Cloud

- Spelling Correction

- Tokenization

- Lemmatization

- Detecting Entities using NER

- Noun Detection

- Language Detection

- Sentence Translation

- Using Inbuilt

Sentiment Classifier

Advanced Text Processing and Feature Extraction

- N-Gram, Bi-Gram etc

- Bag of Words (BoW)

- Term Frequency Calculation

TF - Inverse Document Frequency

IDF TFIDFTerm Frequency – Inverse Document Frequency- Word Embedding

Word2Vecusing SpaCy

Machine Learning Models for Text Classification

- SGDClassifier

- LogisticRegression

- LogisticRegressionCV

- LinearSVC

- RandomForestClassifier

Importing libraries

import pandas as pd import numpy as np import spacy from spacy.lang.en.stop_words import STOP_WORDS

df = pd.read_csv('twitter16m.csv', encoding = 'latin1', header = None)

df.head()

| 0 | 1 | 2 | 3 | 4 | 5 | |

|---|---|---|---|---|---|---|

| 0 | 0 | 1467810369 | Mon Apr 06 22:19:45 PDT 2009 | NO_QUERY | _TheSpecialOne_ | @switchfoot http://twitpic.com/2y1zl – Awww, t… |

| 1 | 0 | 1467810672 | Mon Apr 06 22:19:49 PDT 2009 | NO_QUERY | scotthamilton | is upset that he can’t update his Facebook by … |

| 2 | 0 | 1467810917 | Mon Apr 06 22:19:53 PDT 2009 | NO_QUERY | mattycus | @Kenichan I dived many times for the ball. Man… |

| 3 | 0 | 1467811184 | Mon Apr 06 22:19:57 PDT 2009 | NO_QUERY | ElleCTF | my whole body feels itchy and like its on fire |

| 4 | 0 | 1467811193 | Mon Apr 06 22:19:57 PDT 2009 | NO_QUERY | Karoli | @nationwideclass no, it’s not behaving at all…. |

df = df[[5, 0]]

df.columns = ['twitts', 'sentiment'] df.head()

| twitts | sentiment | |

|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – Awww, t… | 0 |

| 1 | is upset that he can’t update his Facebook by … | 0 |

| 2 | @Kenichan I dived many times for the ball. Man… | 0 |

| 3 | my whole body feels itchy and like its on fire | 0 |

| 4 | @nationwideclass no, it’s not behaving at all…. | 0 |

df['sentiment'].value_counts()

4 800000 0 800000 Name: sentiment, dtype: int64

sent_map = {0: 'negative', 4: 'positive'}

Word Counts

In this step, we are splitting the sentences into words using split() function which converts the sentence into the list and on top of that we are using len() function to calculate the number of token or words.

df['word_counts'] = df['twitts'].apply(lambda x: len(str(x).split()))

df.head()

| twitts | sentiment | word_counts | |

|---|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – Awww, t… | 0 | 19 |

| 1 | is upset that he can’t update his Facebook by … | 0 | 21 |

| 2 | @Kenichan I dived many times for the ball. Man… | 0 | 18 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 |

| 4 | @nationwideclass no, it’s not behaving at all…. | 0 | 21 |

Characters Count

In this step, we are using len() function to calculate the number characters inside each sentences.

df['char_counts'] = df['twitts'].apply(lambda x: len(x))

df.head()

| twitts | sentiment | word_counts | char_counts | |

|---|---|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – Awww, t… | 0 | 19 | 115 |

| 1 | is upset that he can’t update his Facebook by … | 0 | 21 | 111 |

| 2 | @Kenichan I dived many times for the ball. Man… | 0 | 18 | 89 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 | 47 |

| 4 | @nationwideclass no, it’s not behaving at all…. | 0 | 21 | 111 |

Average Word Length

In this step, we have created a function get_avg_word_len() in which we are calculating the average word length inside each sentences.

def get_avg_word_len(x):

words = x.split()

word_len = 0

for word in words:

word_len = word_len + len(word)

return word_len/len(words) # != len(x)/len(words)

df['avg_word_len'] = df['twitts'].apply(lambda x: get_avg_word_len(x))

len('this is nlp lesson')/4

4.5

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | |

|---|---|---|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – Awww, t… | 0 | 19 | 115 | 5.052632 |

| 1 | is upset that he can’t update his Facebook by … | 0 | 21 | 111 | 4.285714 |

| 2 | @Kenichan I dived many times for the ball. Man… | 0 | 18 | 89 | 3.944444 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 | 47 | 3.700000 |

| 4 | @nationwideclass no, it’s not behaving at all…. | 0 | 21 | 111 | 4.285714 |

115/19

6.052631578947368

Stop Words Count

In this section, we are calculating the number of stop words for each sentences.

print(STOP_WORDS)

{'one', 'up', 'further', 'herself', 'nevertheless', 'their', 'when', 'a', 'bottom', 'both', 'also', 'i', 'sometime', 'ours', "'d", 'him', 'together', 'former', 'hereafter', 'whereby', "'ll", 'three', 'same', 'is', 'say', 'hers', 'must', 'five', 'you', 'across', 'n‘t', 'mostly', 'into', 'am', 'myself', 'something', 'could', 'being', 'seems', 'go', 'only', 'fifteen', 'either', 'us', 'than', 'latter', 'so', 'after', 'name', 'there', 'that', 'next', 'even', 'without', 'along', 'behind', 'very', 'whereas', 'off', 'herein', 'although', 'such', 'themselves', 'then', 'in', 'under', 'of', 'onto', 'really', 'due', 'otherwise', 'give', 'yourself', 'indeed', 'my', 'mine', 'show', 'via', 'elsewhere', 'be', 'just', 'thence', 'them', 'beside', 'though', 'as', 'out', 'third', 'however', 'twelve', 'except', '‘d', 'anything', 'move', 'side', 'everything', 'all', 'towards', 'whatever', 'will', 'n’t', 'toward', 'keep', 'hereupon', 'might', 'no', 'own', 'itself', 'for', 'can', 'rather', 'whether', 'while', 'and', 'part', 'over', 'else', 'has', 'forty', 'about', 'hereby', 'sixty', 'using', 'here', 'please', 'often', '’re', 'any', 'ca', 'per', 'whole', 'it', 'are', 'from', 'had', 'thru', '’m', 'two', 'fifty', 'your', 'latterly', 'again', 'or', 'few', 'against', 'much', 'somewhere', 'but', '’d', 'somehow', 'never', 'becoming', 'down', 'regarding', 'always', 'other', 'amount', 'because', 'noone', 'anyone', 'six', 'each', 'thus', 'alone', 'why', 'his', 'sometimes', 'now', 'since', 'become', 'see', 'she', 'where', 'whereafter', 'various', 'perhaps', 'another', 'who', 'anyhow', 'yourselves', 'someone', 'ten', 'became', 'nothing', 'front', 'an', 'anyway', 'get', 'thereafter', "'re", 'our', 'call', 'therein', 'have', 'this', 'above', 'some', 'namely', '‘re', 'seem', 'until', '’ll', 'more', 'still', "n't", 'the', 'does', 'himself', 'take', 'he', 'which', 'seeming', 'been', 'beforehand', 'may', 'do', 'well', 'ever', 'used', 'enough', 'every', 'top', 'made', "'m", 'hundred', 'almost', 'her', 'moreover', 'wherever', '’s', 'amongst', 'meanwhile', 'nobody', 'ourselves', 'whenever', 'at', 'wherein', 'nowhere', 'around', 'between', 'last', 'others', 'becomes', 'they', 'full', 'below', 'nor', 'before', 'what', 'within', 'these', 'besides', 'whereupon', 'how', 'throughout', 'eight', "'s", 'on', 'most', 'if', '‘ve', 'should', 'four', 'serious', 'thereby', '‘ll', 'whence', 'done', 'anywhere', 'yours', 'formerly', 'everyone', 'whose', 'back', 'make', 'among', 'first', 'we', '‘s', 'neither', 'doing', 'already', 'those', 'empty', 'did', 'not', '‘m', 'less', 'to', 'during', 'twenty', 'too', 'put', 'nine', 'yet', 'everywhere', 'quite', 'were', 'seemed', '’ve', 'through', 'once', 'whither', 'thereupon', 'whoever', "'ve", 'therefore', 'me', 'unless', 'whom', 'cannot', 'afterwards', 'none', 'least', 'hence', 'eleven', 'with', 'upon', 'was', 'would', 'by', 'beyond', 'several', 'its', 'many', 're'}

x = 'this is text data'

x.split()

['this', 'is', 'text', 'data']

len([t for t in x.split() if t in STOP_WORDS])

2

df['stop_words_len'] = df['twitts'].apply(lambda x: len([t for t in x.split() if t in STOP_WORDS]))

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | |

|---|---|---|---|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – Awww, t… | 0 | 19 | 115 | 5.052632 | 4 |

| 1 | is upset that he can’t update his Facebook by … | 0 | 21 | 111 | 4.285714 | 9 |

| 2 | @Kenichan I dived many times for the ball. Man… | 0 | 18 | 89 | 3.944444 | 7 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 | 47 | 3.700000 | 5 |

| 4 | @nationwideclass no, it’s not behaving at all…. | 0 | 21 | 111 | 4.285714 | 10 |

Count #HashTags and @Mentions

In this section, we are calculating the number of words staring with Hashtags and @.

x = 'this #hashtag and this is @mention' # x = x.split() # x

[t for t in x.split() if t.startswith('@')]

['@mention']

df['hashtags_count'] = df['twitts'].apply(lambda x: len([t for t in x.split() if t.startswith('#')]))

df['mentions_count'] = df['twitts'].apply(lambda x: len([t for t in x.split() if t.startswith('@')]))

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | |

|---|---|---|---|---|---|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – Awww, t… | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 |

| 1 | is upset that he can’t update his Facebook by … | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 |

| 2 | @Kenichan I dived many times for the ball. Man… | 0 | 18 | 89 | 3.944444 | 7 | 0 | 1 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 | 47 | 3.700000 | 5 | 0 | 0 |

| 4 | @nationwideclass no, it’s not behaving at all…. | 0 | 21 | 111 | 4.285714 | 10 | 0 | 1 |

If numeric digits are present in twitts

In this section, we are calculating the number of digits in each sentences.

df['numerics_count'] = df['twitts'].apply(lambda x: len([t for t in x.split() if t.isdigit()]))

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – Awww, t… | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 | 0 |

| 1 | is upset that he can’t update his Facebook by … | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 | 0 |

| 2 | @Kenichan I dived many times for the ball. Man… | 0 | 18 | 89 | 3.944444 | 7 | 0 | 1 | 0 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 | 47 | 3.700000 | 5 | 0 | 0 | 0 |

| 4 | @nationwideclass no, it’s not behaving at all…. | 0 | 21 | 111 | 4.285714 | 10 | 0 | 1 | 0 |

UPPER case words count

In this section, we are calculating the number of UPPERcase words in each sentences if length is more than 3.

df['upper_counts'] = df['twitts'].apply(lambda x: len([t for t in x.split() if t.isupper() and len(x)>3]))

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – Awww, t… | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 | 0 | 1 |

| 1 | is upset that he can’t update his Facebook by … | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 | 0 | 0 |

| 2 | @Kenichan I dived many times for the ball. Man… | 0 | 18 | 89 | 3.944444 | 7 | 0 | 1 | 0 | 1 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 | 47 | 3.700000 | 5 | 0 | 0 | 0 | 0 |

| 4 | @nationwideclass no, it’s not behaving at all…. | 0 | 21 | 111 | 4.285714 | 10 | 0 | 1 | 0 | 1 |

df.loc[96]['twitts']

"so rylee,grace...wana go steve's party or not?? SADLY SINCE ITS EASTER I WNT B ABLE 2 DO MUCH BUT OHH WELL....."

Preprocessing and Cleaning

In this section, we are converting the words to LOWERcase words in each sentences.

Lower case conversion

df['twitts'] = df['twitts'].apply(lambda x: x.lower())

df.head(2)

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – awww, t… | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 | 0 | 1 |

| 1 | is upset that he can’t update his facebook by … | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 | 0 | 0 |

Contraction to Expansion

In this section, we are converting all short words to their respective fullwords based on the words defined in the dictionary and using function cont_to_exp().

x = "i don't know what you want, can't, he'll, i'd"

contractions = {

"ain't": "am not",

"aren't": "are not",

"can't": "cannot",

"can't've": "cannot have",

"'cause": "because",

"could've": "could have",

"couldn't": "could not",

"couldn't've": "could not have",

"didn't": "did not",

"doesn't": "does not",

"don't": "do not",

"hadn't": "had not",

"hadn't've": "had not have",

"hasn't": "has not",

"haven't": "have not",

"he'd": "he would",

"he'd've": "he would have",

"he'll": "he will",

"he'll've": "he will have",

"he's": "he is",

"how'd": "how did",

"how'd'y": "how do you",

"how'll": "how will",

"how's": "how does",

"i'd": "i would",

"i'd've": "i would have",

"i'll": "i will",

"i'll've": "i will have",

"i'm": "i am",

"i've": "i have",

"isn't": "is not",

"it'd": "it would",

"it'd've": "it would have",

"it'll": "it will",

"it'll've": "it will have",

"it's": "it is",

"let's": "let us",

"ma'am": "madam",

"mayn't": "may not",

"might've": "might have",

"mightn't": "might not",

"mightn't've": "might not have",

"must've": "must have",

"mustn't": "must not",

"mustn't've": "must not have",

"needn't": "need not",

"needn't've": "need not have",

"o'clock": "of the clock",

"oughtn't": "ought not",

"oughtn't've": "ought not have",

"shan't": "shall not",

"sha'n't": "shall not",

"shan't've": "shall not have",

"she'd": "she would",

"she'd've": "she would have",

"she'll": "she will",

"she'll've": "she will have",

"she's": "she is",

"should've": "should have",

"shouldn't": "should not",

"shouldn't've": "should not have",

"so've": "so have",

"so's": "so is",

"that'd": "that would",

"that'd've": "that would have",

"that's": "that is",

"there'd": "there would",

"there'd've": "there would have",

"there's": "there is",

"they'd": "they would",

"they'd've": "they would have",

"they'll": "they will",

"they'll've": "they will have",

"they're": "they are",

"they've": "they have",

"to've": "to have",

"wasn't": "was not",

" u ": " you ",

" ur ": " your ",

" n ": " and "}

def cont_to_exp(x):

if type(x) is str:

for key in contractions:

value = contractions[key]

x = x.replace(key, value)

return x

else:

return x

x = "hi, i'd be happy"

cont_to_exp(x)

'hi, i would be happy'

%%time df['twitts'] = df['twitts'].apply(lambda x: cont_to_exp(x))

Wall time: 52.7 s

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | @switchfoot http://twitpic.com/2y1zl – awww, t… | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 | 0 | 1 |

| 1 | is upset that he cannot update his facebook by… | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 | 0 | 0 |

| 2 | @kenichan i dived many times for the ball. man… | 0 | 18 | 89 | 3.944444 | 7 | 0 | 1 | 0 | 1 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 | 47 | 3.700000 | 5 | 0 | 0 | 0 | 0 |

| 4 | @nationwideclass no, it is not behaving at all… | 0 | 21 | 111 | 4.285714 | 10 | 0 | 1 | 0 | 1 |

Count and Remove Emails

In this section, we are removing as well as counting the emails.

import re

x = 'hi my email me at email@email.com another@email.com'

re.findall(r'([a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+)', x)

['email@email.com', 'another@email.com']

df['emails'] = df['twitts'].apply(lambda x: re.findall(r'([a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+)', x))

df['emails_count'] = df['emails'].apply(lambda x: len(x))

df[df['emails_count']>0].head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | emails | emails_count | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4054 | i want a new laptop. hp tx2000 is the bomb. :… | 0 | 20 | 103 | 4.150000 | 6 | 0 | 0 | 0 | 4 | [gabbehhramos@yahoo.com] | 1 |

| 7917 | who stole elledell@gmail.com? | 0 | 3 | 31 | 9.000000 | 1 | 0 | 0 | 0 | 0 | [elledell@gmail.com] | 1 |

| 8496 | @alexistehpom really? did you send out all th… | 0 | 20 | 130 | 5.500000 | 11 | 0 | 1 | 0 | 0 | [missataari@gmail.com] | 1 |

| 10290 | @laureystack awh…that is kinda sad lol add … | 0 | 8 | 76 | 8.500000 | 0 | 0 | 1 | 0 | 0 | [hello.kitty.65@hotmail.com] | 1 |

| 16413 | @jilliancyork got 2 bottom of it, human error… | 0 | 21 | 137 | 5.428571 | 7 | 0 | 1 | 1 | 0 | [press@linkedin.com] | 1 |

re.sub(r'([a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+)', '', x)

'hi my email me at '

df['twitts'] = df['twitts'].apply(lambda x: re.sub(r'([a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+)', '', x))

df[df['emails_count']>0].head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | emails | emails_count | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4054 | i want a new laptop. hp tx2000 is the bomb. :… | 0 | 20 | 103 | 4.150000 | 6 | 0 | 0 | 0 | 4 | [gabbehhramos@yahoo.com] | 1 |

| 7917 | who stole ? | 0 | 3 | 31 | 9.000000 | 1 | 0 | 0 | 0 | 0 | [elledell@gmail.com] | 1 |

| 8496 | @alexistehpom really? did you send out all th… | 0 | 20 | 130 | 5.500000 | 11 | 0 | 1 | 0 | 0 | [missataari@gmail.com] | 1 |

| 10290 | @laureystack awh…that is kinda sad lol add … | 0 | 8 | 76 | 8.500000 | 0 | 0 | 1 | 0 | 0 | [hello.kitty.65@hotmail.com] | 1 |

| 16413 | @jilliancyork got 2 bottom of it, human error… | 0 | 21 | 137 | 5.428571 | 7 | 0 | 1 | 1 | 0 | [press@linkedin.com] | 1 |

Count URLs and Remove it

In this section, we are removing as well as counting the URLs using regex functions.

x = 'hi, to watch more visit https://youtube.com/kgptalkie'

re.findall(r'(http|ftp|https)://([\w_-]+(?:(?:\.[\w_-]+)+))([\w.,@?^=%&:/~+#-]*[\w@?^=%&/~+#-])?', x)

[('https', 'youtube.com', '/kgptalkie')]df['urls_flag'] = df['twitts'].apply(lambda x: len(re.findall(r'(http|ftp|https)://([\w_-]+(?:(?:\.[\w_-]+)+))([\w.,@?^=%&:/~+#-]*[\w@?^=%&/~+#-])?', x)))

re.sub(r'(http|ftp|https)://([\w_-]+(?:(?:\.[\w_-]+)+))([\w.,@?^=%&:/~+#-]*[\w@?^=%&/~+#-])?', '', x)

'hi, to watch more visit '

df['twitts'] = df['twitts'].apply(lambda x: re.sub(r'(http|ftp|https)://([\w_-]+(?:(?:\.[\w_-]+)+))([\w.,@?^=%&:/~+#-]*[\w@?^=%&/~+#-])?', '', x))

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | emails | emails_count | urls_flag | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | @switchfoot – awww, that is a bummer. you sh… | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 | 0 | 1 | [] | 0 | 1 |

| 1 | is upset that he cannot update his facebook by… | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 | 0 | 0 | [] | 0 | 0 |

| 2 | @kenichan i dived many times for the ball. man… | 0 | 18 | 89 | 3.944444 | 7 | 0 | 1 | 0 | 1 | [] | 0 | 0 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 | 47 | 3.700000 | 5 | 0 | 0 | 0 | 0 | [] | 0 | 0 |

| 4 | @nationwideclass no, it is not behaving at all… | 0 | 21 | 111 | 4.285714 | 10 | 0 | 1 | 0 | 1 | [] | 0 | 0 |

df.loc[0]['twitts']

'@switchfoot - awww, that is a bummer. you shoulda got david carr of third day to do it. ;d'

Remove RT

In this section, we are removing retweet characters.

df['twitts'] = df['twitts'].apply(lambda x: re.sub('RT', "", x))

Special Chars removal or punctuation removal

In this section, we are removing special characters and punctuations

df['twitts'] = df['twitts'].apply(lambda x: re.sub('[^A-Z a-z 0-9-]+', '', x))

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | emails | emails_count | urls_flag | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | switchfoot – awww that is a bummer you shoul… | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 | 0 | 1 | [] | 0 | 1 |

| 1 | is upset that he cannot update his facebook by… | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 | 0 | 0 | [] | 0 | 0 |

| 2 | kenichan i dived many times for the ball manag… | 0 | 18 | 89 | 3.944444 | 7 | 0 | 1 | 0 | 1 | [] | 0 | 0 |

| 3 | my whole body feels itchy and like its on fire | 0 | 10 | 47 | 3.700000 | 5 | 0 | 0 | 0 | 0 | [] | 0 | 0 |

| 4 | nationwideclass no it is not behaving at all i… | 0 | 21 | 111 | 4.285714 | 10 | 0 | 1 | 0 | 1 | [] | 0 | 0 |

Remove multiple spaces "hi hello "

In this section, we are removing the multiple spaces.

x = 'thanks for watching and please like this video'

" ".join(x.split())

'thanks for watching and please like this video'

df['twitts'] = df['twitts'].apply(lambda x: " ".join(x.split()))

df.head(2)

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | emails | emails_count | urls_flag | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | switchfoot – awww that is a bummer you shoulda… | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 | 0 | 1 | [] | 0 | 1 |

| 1 | is upset that he cannot update his facebook by… | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 | 0 | 0 | [] | 0 | 0 |

Remove HTML tags

In this section, we are removing the HTML tags.

from bs4 import BeautifulSoup

x = '<html><h2>Thanks for watching</h2></html>'

BeautifulSoup(x, 'lxml').get_text()

'Thanks for watching'

%%time df['twitts'] = df['twitts'].apply(lambda x: BeautifulSoup(x, 'lxml').get_text())

Wall time: 11min 37s

Remove Accented Chars

In this section, we are removing the accented characters.

import unicodedata

x = 'Áccěntěd těxt'

def remove_accented_chars(x):

x = unicodedata.normalize('NFKD', x).encode('ascii', 'ignore').decode('utf-8', 'ignore')

return x

remove_accented_chars(x)

'Accented text'

SpaCy and NLP

Remove Stop Words

In this section, we are removing the stop words from text document.

import spacy

x = 'this is stop words removal code is a the an how what'

" ".join([t for t in x.split() if t not in STOP_WORDS])

'stop words removal code'

df['twitts'] = df['twitts'].apply(lambda x: " ".join([t for t in x.split() if t not in STOP_WORDS]))

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | emails | emails_count | urls_flag | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | switchfoot – awww bummer shoulda got david car… | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 | 0 | 1 | [] | 0 | 1 |

| 1 | upset update facebook texting cry result schoo… | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 | 0 | 0 | [] | 0 | 0 |

| 2 | kenichan dived times ball managed save 50 rest… | 0 | 18 | 89 | 3.944444 | 7 | 0 | 1 | 0 | 1 | [] | 0 | 0 |

| 3 | body feels itchy like fire | 0 | 10 | 47 | 3.700000 | 5 | 0 | 0 | 0 | 0 | [] | 0 | 0 |

| 4 | nationwideclass behaving mad | 0 | 21 | 111 | 4.285714 | 10 | 0 | 1 | 0 | 1 | [] | 0 | 0 |

Convert into base or root form of word

In this section, we are converting the words to their forms.

nlp = spacy.load('en_core_web_sm')

x = 'kenichan dived times ball managed save 50 rest'

# dive = dived, time = times, manage = managed

# x = 'i you he she they is am are'

def make_to_base(x):

x_list = []

doc = nlp(x)

for token in doc:

lemma = str(token.lemma_)

if lemma == '-PRON-' or lemma == 'be':

lemma = token.text

x_list.append(lemma)

print(" ".join(x_list))

make_to_base(x)

kenichan dive time ball manage save 50 rest

Common words removal

In this section, we are removing top 20 most occured word from text corpus.

' '.join(df.head()['twitts'])

'switchfoot - awww bummer shoulda got david carr day d upset update facebook texting cry result school today blah kenichan dived times ball managed save 50 rest bounds body feels itchy like fire nationwideclass behaving mad'

text = ' '.join(df['twitts'])

text = text.split()

freq_comm = pd.Series(text).value_counts()

f20 = freq_comm[:20]

f20

good 89366 day 82299 like 77735 - 69662 today 64512 going 64078 love 63421 work 62804 got 60749 time 56081 lol 55094 know 51172 im 50147 want 42070 new 41995 think 41040 night 41029 amp 40616 thanks 39311 home 39168 dtype: int64

df['twitts'] = df['twitts'].apply(lambda x: " ".join([t for t in x.split() if t not in f20]))

Rare words removal

In this section, we are removing least 20 most occured word from text corpus.

rare20 = freq_comm[-20:]

rare20

veru 1 80-90f 1 refrigerant 1 demaisss 1 knittingsci-fi 1 wendireed 1 danielletuazon 1 chacha8 1 a-zquot 1 krustythecat 1 westmount 1 -appreciate 1 motocycle 1 madamhow 1 felspoon 1 fastbloke 1 900pmno 1 nxec 1 laassssttt 1 update-uri 1 dtype: int64

rare = freq_comm[freq_comm.values == 1]

rare

mamat 1

fiive 1

music-festival 1

leenahyena 1

11517 1

..

fastbloke 1

900pmno 1

nxec 1

laassssttt 1

update-uri 1

Length: 536196, dtype: int64df['twitts'] = df['twitts'].apply(lambda x: ' '.join([t for t in x.split() if t not in rare20]))

df.head()

| twitts | sentiment | word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | emails | emails_count | urls_flag | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | switchfoot awww bummer shoulda david carr d | 0 | 19 | 115 | 5.052632 | 4 | 0 | 1 | 0 | 1 | [] | 0 | 1 |

| 1 | upset update facebook texting cry result schoo… | 0 | 21 | 111 | 4.285714 | 9 | 0 | 0 | 0 | 0 | [] | 0 | 0 |

| 2 | kenichan dived times ball managed save 50 rest… | 0 | 18 | 89 | 3.944444 | 7 | 0 | 1 | 0 | 1 | [] | 0 | 0 |

| 3 | body feels itchy fire | 0 | 10 | 47 | 3.700000 | 5 | 0 | 0 | 0 | 0 | [] | 0 | 0 |

| 4 | nationwideclass behaving mad | 0 | 21 | 111 | 4.285714 | 10 | 0 | 1 | 0 | 1 | [] | 0 | 0 |

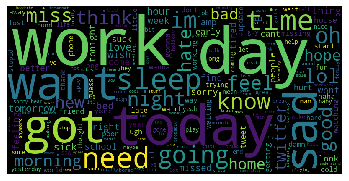

Word Cloud Visualization

In this section, we are visualizing the text corpus using library WordCloud.

# !pip install wordcloud

from wordcloud import WordCloud import matplotlib.pyplot as plt %matplotlib inline

x = ' '.join(text[:20000])

len(text)

10837079

wc = WordCloud(width = 800, height=400).generate(x)

plt.imshow(wc)

plt.axis('off')

plt.show()

Spelling Correction

In this section, we are correcting the spelling of each words.

# !pip install -U textblob # !python -m textblob.download_corpora

from textblob import TextBlob

x = 'tanks forr waching this vidio carri'

x = TextBlob(x).correct()

x

TextBlob("tanks for watching this video carry")Tokenization

Tokenization is all about breaking the sentences into individual words.

x = 'thanks#watching this video. please like it'

TextBlob(x).words

WordList(['thanks', 'watching', 'this', 'video', 'please', 'like', 'it'])

doc = nlp(x)

for token in doc:

print(token)

thanks#watching this video . please like it

Lemmatization

Lemmatization is the process of grouping together the different inflected forms of a word so they can be analysed as a single item. Lemmatization is similar to stemming but it brings context to the words. So it links words with similar meaning to one word.

x = 'runs run running ran'

from textblob import Word

for token in x.split():

print(Word(token).lemmatize())

run run running ran

doc = nlp(x)

for token in doc:

print(token.lemma_)

run run run run

Detect Entities using NER of SpaCy

Named Entity Recognition (NER) is a standard NLP problem which involves spotting named entities (people, places, organizations etc.) from a chunk of text, and classifying them into a predefined set of categories. Some of the practical applications of NER include:

- Scanning news articles for the people, organizations and locations reported.

- Providing concise features for search optimization: instead of searching the entire content, one may simply search for the major entities involved.

- Quickly retrieving geographical locations talked about in Twitter posts.

x = "Breaking News: Donald Trump, the president of the USA is looking to sign a deal to mine the moon"

doc = nlp(x)

for ent in doc.ents:

print(ent.text + ' - ' + ent.label_ + ' - ' + str(spacy.explain(ent.label_)))

Donald Trump - PERSON - People, including fictional USA - GPE - Countries, cities, states

from spacy import displacy

displacy.render(doc, style = 'ent')

Breaking News: Donald Trump PERSON , the president of the USA GPE is looking to sign a deal to mine the moon

Detecting Nouns

In this section, we are detecting nouns.

x

'Breaking News: Donald Trump, the president of the USA is looking to sign a deal to mine the moon'

for noun in doc.noun_chunks:

print(noun)

Breaking News Donald Trump the president the USA a deal the moon

Translation and Language Detection

Language Code: https://www.loc.gov/standards/iso639-2/php/code_list.php

x

'Breaking News: Donald Trump, the president of the USA is looking to sign a deal to mine the moon'

tb = TextBlob(x)

tb.detect_language()

'en'

tb.translate(to='bn')

TextBlob("ব্রেকিং নিউজ: যুক্তরাষ্ট্রের রাষ্ট্রপতি ডোনাল্ড ট্রাম্প চাঁদটি খনির জন্য একটি চুক্তিতে সই করতে চাইছেন")Use inbuilt sentiment classifier

TextBlob library also comes with a NaiveBayesAnalyzer, Naive Bayes is a commonly used machine learning text-classification algorithm.

from textblob.sentiments import NaiveBayesAnalyzer

x = 'we all stands together to fight with corona virus. we will win together'

tb = TextBlob(x, analyzer=NaiveBayesAnalyzer())

tb.sentiment

Sentiment(classification='pos', p_pos=0.8259779151942094, p_neg=0.17402208480578962)

x = 'we all are sufering from corona'

tb = TextBlob(x, analyzer=NaiveBayesAnalyzer())

tb.sentiment

Sentiment(classification='pos', p_pos=0.75616044472398, p_neg=0.2438395552760203)

Advanced Text Processing

N-Grams

An N-gram means a sequence of N words. So for example, “KGPtalkie blog” is a 2-gram (a bigram), “A KGPtalkie blog post” is a 4-gram, and “Write on KGPtalkie” is a 3-gram (trigram). Well, that wasn’t very interesting or exciting. True, but we still have to look at the probability used with n-grams, which is quite interesting.

x = 'thanks for watching'

tb = TextBlob(x)

tb.ngrams(3)

[WordList(['thanks', 'for', 'watching'])]

Bag of Words BoW

In this section, we are going to discuss a Natural Language Processing technique of text modeling known as the Bag of Words model. Whenever we apply any algorithm in NLP, it works on numbers. We cannot directly feed our text into that algorithm. Hence, the Bag of Words model is used to preprocess the text by converting it into a bag of words, which keeps a count of the total occurrences of most frequently used words.

This model can be visualized using a table, which contains the count of words corresponding to the word itself.

x = ['this is first sentence this is', 'this is second', 'this is last']

from sklearn.feature_extraction.text import CountVectorizer

cv = CountVectorizer(ngram_range=(1,1)) text_counts = cv.fit_transform(x)

text_counts.toarray()

array([[1, 2, 0, 0, 1, 2],

[0, 1, 0, 1, 0, 1],

[0, 1, 1, 0, 0, 1]], dtype=int64)cv.get_feature_names()

['first', 'is', 'last', 'second', 'sentence', 'this']

bow = pd.DataFrame(text_counts.toarray(), columns = cv.get_feature_names())

bow

| first | is | last | second | sentence | this | |

|---|---|---|---|---|---|---|

| 0 | 1 | 2 | 0 | 0 | 1 | 2 |

| 1 | 0 | 1 | 0 | 1 | 0 | 1 |

| 2 | 0 | 1 | 1 | 0 | 0 | 1 |

x

['this is first sentence this is', 'this is second', 'this is last']

Term Frequency

Term frequency (TF) often used in Text Mining, NLP, and Information Retrieval tells you how frequently a term occurs in a document. In the context of natural language, terms correspond to words or phrases. Since every document is different in length, it is possible that a term would appear more often in longer documents than shorter ones. Thus, term frequency is often divided by the total number of terms in the document as a way of normalization.

TF(t) = (Number of times term t appears in a document) / (Total number of terms in the document).

x

['this is first sentence this is', 'this is second', 'this is last']

bow

| first | is | last | second | sentence | this | |

|---|---|---|---|---|---|---|

| 0 | 1 | 2 | 0 | 0 | 1 | 2 |

| 1 | 0 | 1 | 0 | 1 | 0 | 1 |

| 2 | 0 | 1 | 1 | 0 | 0 | 1 |

bow.shape

(3, 6)

tf = bow.copy()

for index, row in enumerate(tf.iterrows()):

for col in row[1].index:

tf.loc[index, col] = tf.loc[index, col]/sum(row[1].values)

tf

| first | is | last | second | sentence | this | |

|---|---|---|---|---|---|---|

| 0 | 0.166667 | 0.333333 | 0.000000 | 0.000000 | 0.166667 | 0.333333 |

| 1 | 0.000000 | 0.333333 | 0.000000 | 0.333333 | 0.000000 | 0.333333 |

| 2 | 0.000000 | 0.333333 | 0.333333 | 0.000000 | 0.000000 | 0.333333 |

Inverse Document Frequency IDF

Inverse Document Frequency (IDF) is a weight indicating how commonly a word is used. The more frequent its usage across documents, the lower its score. The lower the score, the less important the word becomes.

For example, the word the appears in almost all English texts and would thus have a very low IDF score as it carries very little “topic” information. In contrast, if you take the word coffee, while it is common, it’s not used as widely as the word the. Thus, coffee would have a higher IDF score than the.

idf = log( (1 + N)/(n + 1)) + 1 used in sklearn when smooth_idf = True

where, N is the total number of rows and n is the number of rows in which the word was present.

import numpy as np

x_df = pd.DataFrame(x, columns=['words'])

x_df

| words | |

|---|---|

| 0 | this is first sentence this is |

| 1 | this is second |

| 2 | this is last |

bow

| first | is | last | second | sentence | this | |

|---|---|---|---|---|---|---|

| 0 | 1 | 2 | 0 | 0 | 1 | 2 |

| 1 | 0 | 1 | 0 | 1 | 0 | 1 |

| 2 | 0 | 1 | 1 | 0 | 0 | 1 |

N = bow.shape[0] N

3

bb = bow.astype('bool')

bb

| first | is | last | second | sentence | this | |

|---|---|---|---|---|---|---|

| 0 | True | True | False | False | True | True |

| 1 | False | True | False | True | False | True |

| 2 | False | True | True | False | False | True |

bb['is'].sum()

3

cols = bb.columns cols

Index(['first', 'is', 'last', 'second', 'sentence', 'this'], dtype='object')

nz = []

for col in cols:

nz.append(bb[col].sum())

nz

[1, 3, 1, 1, 1, 3]

idf = []

for index, col in enumerate(cols):

idf.append(np.log((N + 1)/(nz[index] + 1)) + 1)

idf

[1.6931471805599454, 1.0, 1.6931471805599454, 1.6931471805599454, 1.6931471805599454, 1.0]

bow

| first | is | last | second | sentence | this | |

|---|---|---|---|---|---|---|

| 0 | 1 | 2 | 0 | 0 | 1 | 2 |

| 1 | 0 | 1 | 0 | 1 | 0 | 1 |

| 2 | 0 | 1 | 1 | 0 | 0 | 1 |

TFIDF

TF-IDF which stands for Term Frequency – Inverse Document Frequency. It is one of the most important techniques used for information retrieval to represent how important a specific word or phrase is to a given document. Let’s take an example, we have a string or Bag of Words (BOW) and we have to extract information from it, then we can use this approach.

The tf-idf value increases in proportion to the number of times a word appears in the document but is often offset by the frequency of the word in the corpus, which helps to adjust with respect to the fact that some words appear more frequently in general.

TF-IDF use two statistical methods, first is Term Frequency and the other is Inverse Document Frequency. Term frequency refers to the total number of times a given term t appears in the document doc against (per) the total number of all words in the document and The inverse document frequency measure of how much information the word provides. It measures the weight of a given word in the entire document. IDF show how common or rare a given word is across all documents.

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf = TfidfVectorizer() x_tfidf = tfidf.fit_transform(x_df['words'])

x_tfidf.toarray()

array([[0.45688214, 0.5396839 , 0. , 0. , 0.45688214,

0.5396839 ],

[0. , 0.45329466, 0. , 0.76749457, 0. ,

0.45329466],

[0. , 0.45329466, 0.76749457, 0. , 0. ,

0.45329466]])tfidf.idf_

array([1.69314718, 1. , 1.69314718, 1.69314718, 1.69314718,

1. ])idf

[1.6931471805599454, 1.0, 1.6931471805599454, 1.6931471805599454, 1.6931471805599454, 1.0]

Word Embeddings

Word Embedding is a language modeling technique used for mapping words to vectors of real numbers. It represents words or phrases in vector space with several dimensions. Word embeddings can be generated using various methods like neural networks, co-occurrence matrix, probabilistic models, etc.

SpaCy Word2Vec

# !python -m spacy download en_core_web_lg

nlp = spacy.load('en_core_web_lg')

doc = nlp('thank you! dog cat lion dfasaa')

for token in doc:

print(token.text, token.has_vector)

thank True you True ! True dog True cat True lion True dfasaa False

token.vector.shape

(300,)

nlp('cat').vector.shape

(300,)

for token1 in doc:

for token2 in doc:

print(token1.text, token2.text, token1.similarity(token2))

print()

thank thank 1.0 thank you 0.5647585 thank ! 0.52147406 thank dog 0.2504265 thank cat 0.20648485 thank lion 0.13629764

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

thank dfasaa 0.0 you thank 0.5647585 you you 1.0 you ! 0.4390223 you dog 0.36494097 you cat 0.3080798 you lion 0.20392051

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

you dfasaa 0.0 ! thank 0.52147406 ! you 0.4390223 ! ! 1.0 ! dog 0.29852203 ! cat 0.29702348 ! lion 0.19601382

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

! dfasaa 0.0 dog thank 0.2504265 dog you 0.36494097 dog ! 0.29852203 dog dog 1.0 dog cat 0.80168545 dog lion 0.47424486

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

dog dfasaa 0.0 cat thank 0.20648485 cat you 0.3080798 cat ! 0.29702348 cat dog 0.80168545 cat cat 1.0 cat lion 0.52654374

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

cat dfasaa 0.0 lion thank 0.13629764 lion you 0.20392051 lion ! 0.19601382 lion dog 0.47424486 lion cat 0.52654374 lion lion 1.0

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

lion dfasaa 0.0

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

dfasaa thank 0.0

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

dfasaa you 0.0

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

dfasaa ! 0.0

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

dfasaa dog 0.0

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

dfasaa cat 0.0

C:\ProgramData\Anaconda3\lib\runpy.py:193: UserWarning: [W008] Evaluating Token.similarity based on empty vectors. "__main__", mod_spec)

dfasaa lion 0.0 dfasaa dfasaa 1.0

Machine Learning Models for Text Classification

BoW

#displaying the shape of the dimension df.shape

(1600000, 13)

#sampling the number of rows df0 = df[df['sentiment']==0].sample(2000) df4 = df[df['sentiment']==4].sample(2000)

dfr = df0.append(df4)

dfr.shape

(4000, 13)

#removing the twitts,sentiment and emails columns dfr_feat = dfr.drop(labels=['twitts','sentiment','emails'], axis = 1).reset_index(drop=True)

dfr_feat

| word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | emails_count | urls_flag | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 15 | 81 | 4.400000 | 6 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 8 | 47 | 4.875000 | 4 | 0 | 1 | 0 | 0 | 0 | 0 |

| 2 | 15 | 69 | 3.600000 | 6 | 0 | 1 | 0 | 0 | 0 | 0 |

| 3 | 9 | 42 | 3.666667 | 4 | 0 | 0 | 0 | 2 | 0 | 0 |

| 4 | 14 | 77 | 4.500000 | 5 | 0 | 0 | 0 | 0 | 0 | 0 |

| … | … | … | … | … | … | … | … | … | … | … |

| 3995 | 3 | 33 | 9.666667 | 1 | 0 | 1 | 0 | 0 | 0 | 0 |

| 3996 | 16 | 78 | 3.875000 | 4 | 0 | 1 | 0 | 2 | 0 | 0 |

| 3997 | 27 | 134 | 3.962963 | 9 | 0 | 1 | 0 | 2 | 0 | 0 |

| 3998 | 6 | 44 | 6.333333 | 0 | 0 | 1 | 1 | 1 | 0 | 0 |

| 3999 | 5 | 25 | 4.000000 | 3 | 0 | 1 | 0 | 0 | 0 | 0 |

4000 rows × 10 columns

y = dfr['sentiment']

from sklearn.feature_extraction.text import CountVectorizer

cv = CountVectorizer() text_counts = cv.fit_transform(dfr['twitts'])

text_counts.toarray().shape

(4000, 9750)

dfr_bow = pd.DataFrame(text_counts.toarray(), columns=cv.get_feature_names())

dfr_bow.head(2)

| 007peter | 05 | 060594 | 09 | 10 | 100 | 1000 | 10000000000000000000000000000 | 1038 | 1041 | … | zomg | zonked | zoo | zooey | zrovna | zshare | zsk | zwel | zzz | zzzzz | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

2 rows × 9750 columns

ML Algorithms

Importing Libraries for ML algorithms

from sklearn.linear_model import SGDClassifier from sklearn.linear_model import LogisticRegression from sklearn.linear_model import LogisticRegressionCV from sklearn.svm import LinearSVC from sklearn.ensemble import RandomForestClassifier from sklearn.model_selection import train_test_split from sklearn.metrics import confusion_matrix, accuracy_score from sklearn.preprocessing import MinMaxScaler

sgd = SGDClassifier(n_jobs=-1, random_state=42, max_iter=200) lgr = LogisticRegression(random_state=42, max_iter=200) lgrcv = LogisticRegressionCV(cv = 2, random_state=42, max_iter=1000) svm = LinearSVC(random_state=42, max_iter=200) rfc = RandomForestClassifier(random_state=42, n_jobs=-1, n_estimators=200)

clf = {'SGD': sgd, 'LGR': lgr, 'LGR-CV': lgrcv, 'SVM': svm, 'RFC': rfc}

clf.keys()

dict_keys(['SGD', 'LGR', 'LGR-CV', 'SVM', 'RFC'])

#here, we are training our model by defining the function classify.

def classify(X, y):

scaler = MinMaxScaler(feature_range=(0, 1))

X = scaler.fit_transform(X)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 42, stratify = y)

for key in clf.keys():

clf[key].fit(X_train, y_train)

y_pred = clf[key].predict(X_test)

ac = accuracy_score(y_test, y_pred)

print(key, " ---> ", ac)

%%time classify(dfr_bow, y)

SGD ---> 0.62375 LGR ---> 0.65375 LGR-CV ---> 0.6525 SVM ---> 0.6325 RFC ---> 0.6525 Wall time: 1min 42s

Manual Feature

#passing all the manual features. dfr_feat.head(2)

| word_counts | char_counts | avg_word_len | stop_words_len | hashtags_count | mentions_count | numerics_count | upper_counts | emails_count | urls_flag | |

|---|---|---|---|---|---|---|---|---|---|---|

| 453843 | 15 | 81 | 4.400 | 6 | 0 | 0 | 0 | 0 | 0 | 0 |

| 388280 | 8 | 47 | 4.875 | 4 | 0 | 1 | 0 | 0 | 0 | 0 |

%%time classify(dfr_feat, y)

SGD ---> 0.64125 LGR ---> 0.645 LGR-CV ---> 0.65 SVM ---> 0.6475 RFC ---> 0.5675 Wall time: 1.35 s

Manual + Bow

#passing all the manual features along with bag of words features. X = dfr_feat.join(dfr_bow)

%%time classify(X, y)

SGD ---> 0.64875 LGR ---> 0.67125 LGR-CV ---> 0.66125 SVM ---> 0.64375 RFC ---> 0.705 Wall time: 1min 18s

TFIDF

#passing all the manual features along with tfidf features. from sklearn.feature_extraction.text import TfidfVectorizer

dfr.shape

(4000, 13)

tfidf = TfidfVectorizer() X = tfidf.fit_transform(dfr['twitts'])

%%time classify(pd.DataFrame(X.toarray()), y)

SGD ---> 0.635 LGR ---> 0.65125 LGR-CV ---> 0.6475 SVM ---> 0.63875 RFC ---> 0.6425 Wall time: 1min 37s

Word2Vec

def get_vec(x):

doc = nlp(x)

return doc.vector.reshape(1, -1)

%%time dfr['vec'] = dfr['twitts'].apply(lambda x: get_vec(x))

Wall time: 51.8 s

X = np.concatenate(dfr['vec'].to_numpy(), axis = 0)

X.shape

(4000, 300)

classify(pd.DataFrame(X), y)

SGD ---> 0.5925 LGR ---> 0.70625 LGR-CV ---> 0.69375

C:\Users\Laxmi\AppData\Roaming\Python\Python37\site-packages\sklearn\svm\_base.py:947: ConvergenceWarning: Liblinear failed to converge, increase the number of iterations. "the number of iterations.", ConvergenceWarning)

SVM ---> 0.70125 RFC ---> 0.66625

def predict_w2v(x):

for key in clf.keys():

y_pred = clf[key].predict(get_vec(x))

print(key, "-->", y_pred)

predict_w2v('hi, thanks for watching this video. please like and subscribe')

SGD --> [0] LGR --> [4] LGR-CV --> [0] SVM --> [4] RFC --> [0]

predict_w2v('please let me know if you want more video')

SGD --> [0] LGR --> [0] LGR-CV --> [0] SVM --> [0] RFC --> [0]

predict_w2v('congratulation looking good congrats')

SGD --> [4] LGR --> [4] LGR-CV --> [4] SVM --> [4] RFC --> [0]

Summary

1. In this article, firstly we have cleared the texts like

removing URLsand varioustags.2. Also, we have used various text featurization techniques like

bag-of-words,tf-idfandword2vec.3. After doing text featurization, we building machine learning models on top of those features.

4 Comments