Sentiment Analysis Using Scikit-learn


Objective

- In this notebook we perform binary classification: each review in the 'Reviews' column of the IMDB dataset is classified as positive or negative. We use TF-IDF to vectorize the text and a linear Support Vector Machine for classification.

Natural Language Processing (NLP) is a sub-field of artificial intelligence that deals with understanding and processing human language. In light of new advancements in machine learning, many organizations have begun applying natural language processing to translation, chatbots and candidate filtering.

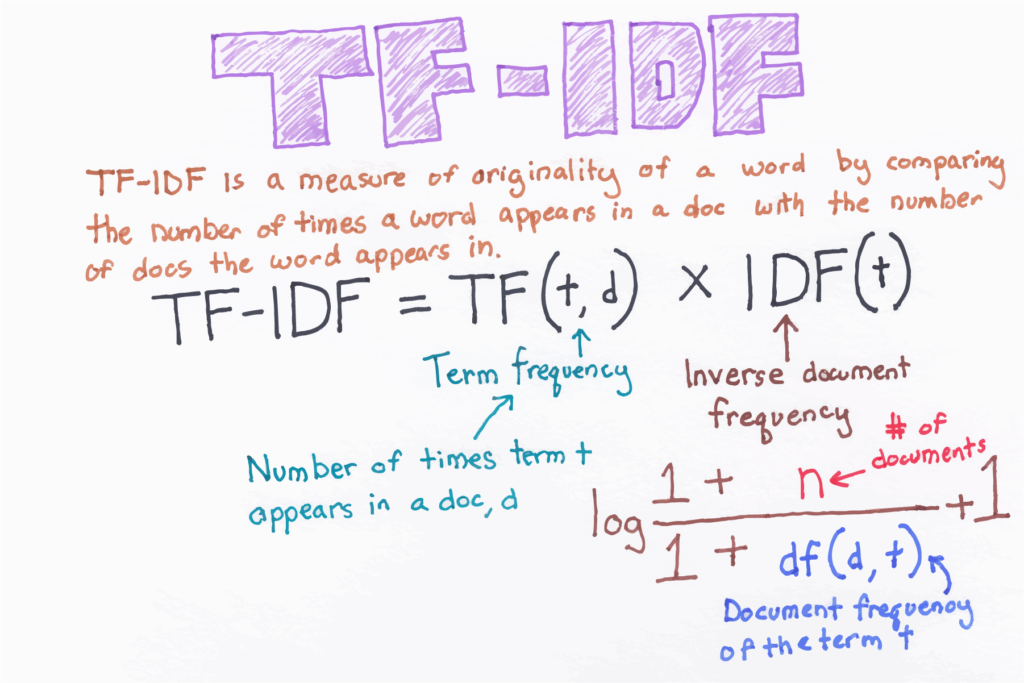

Machine learning algorithms cannot work with raw text directly; the text must first be converted into vectors of numbers. Here we use the TF-IDF vectorizer approach. TF-IDF (term frequency-inverse document frequency) is a natural language processing technique that transforms text into feature vectors that can be used as input to an estimator.

Intro to Pandas

Pandas is a column-oriented data analysis API. It’s a great tool for handling and analyzing input data, and many ML frameworks support pandas data structures as inputs. Although a comprehensive introduction to the pandas API would span many pages, the core concepts are fairly straightforward, and we will present them below. For a more complete reference, the pandas docs site contains extensive documentation and many tutorials.

Intro to NumPy

NumPy is a library for the Python programming language that adds support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions to operate on these arrays.

First, install the pandas, numpy and scikit-learn libraries.

!pip install pandas
!pip install numpy
!pip install scikit-learn

Let’s Get Started

import pandas as pd
import numpy as np

- The dataset is available on GitHub.

git clone is a Git command-line utility that targets an existing repository and creates a clone, or copy, of it.

!git clone https://github.com/laxmimerit/IMDB-Movie-Reviews-Large-Dataset-50k.git

Cloning into 'IMDB-Movie-Reviews-Large-Dataset-50k'...

Reading an Excel file into a pandas DataFrame

df = pd.read_excel('IMDB-Movie-Reviews-Large-Dataset-50k/train.xlsx')

TF-IDF

TF-IDF preserves some semantic information because uncommon words are given more importance than common words.

E.g. in 'She is beautiful', 'beautiful' will have more importance than 'she' or 'is'.
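To make the weighting concrete, here is a minimal pure-Python sketch of the calculation. It assumes a toy three-sentence corpus (hypothetical, not taken from the IMDB data) and the smoothed idf formula ln((1 + n) / (1 + df)) + 1 that scikit-learn documents for its default settings. The helper names `idf` and `tfidf_weights` are made up for illustration, and the length normalization that TfidfVectorizer applies afterwards is left out.

```python
import math
from collections import Counter

# Toy corpus (hypothetical sentences, not taken from the IMDB data).
docs = [
    "she is beautiful",
    "she is here",
    "he is late",
]
tokenized = [d.split() for d in docs]
n_docs = len(tokenized)

# Document frequency: in how many documents each word appears.
doc_freq = Counter(word for tokens in tokenized for word in set(tokens))


def idf(word):
    # Smoothed inverse document frequency: ln((1 + n) / (1 + df)) + 1.
    return math.log((1 + n_docs) / (1 + doc_freq[word])) + 1


def tfidf_weights(tokens):
    # Raw term count times idf, for each distinct word in one document.
    counts = Counter(tokens)
    return {word: counts[word] * idf(word) for word in counts}


weights = tfidf_weights(tokenized[0])
for word, weight in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(f"{word} {weight:.3f}")
```

In this toy corpus 'beautiful' appears in one sentence, 'she' in two and 'is' in all three, so their weights come out at roughly 1.69, 1.29 and 1.00 respectively.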

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import train_test_split
from sklearn.svm import LinearSVC
from sklearn.metrics import classification_report

# displaying top 5 rows of our dataset
df.head()

| | Reviews | Sentiment |
|---|---|---|
| 0 | When I first tuned in on this morning news, I … | neg |
| 1 | Mere thoughts of “Going Overboard” (aka “Babes… | neg |
| 2 | Why does this movie fall WELL below standards?… | neg |
| 3 | Wow and I thought that any Steven Segal movie … | neg |
| 4 | The story is seen before, but that does’n matt… | neg |

!pip install git+https://github.com/laxmimerit/preprocess_kgptalkie.git

Text Preprocessing

In natural language processing (NLP), text preprocessing is the practice of cleaning and preparing text data. NLTK and re are common Python libraries used to handle many text preprocessing tasks.

The preprocess_kgptalkie Python package is prepared by Kgptalkie.

Install these dependencies before using the preprocess_kgptalkie package.

!pip install spacy==2.2.3
!python -m spacy download en_core_web_sm
!pip install beautifulsoup4==4.9.1
!pip install textblob==0.15.3

Import the preprocess_kgptalkie package and the regular expression module (re).

import preprocess_kgptalkie as ps
import re

Define the get_clean function, which takes a value from the 'Reviews' column and performs the following steps:

"""
Step 1: Lowercase the text, then remove backslashes and replace underscores with spaces.
Step 2: Remove emails from the Reviews column.
Step 3: Remove HTML tags from the Reviews column.
Step 4: Remove special characters.
Step 5: Collapse any character repeated three or more times into a single character, so that stretched words become meaningful again.

E.g. x = 'lllooooovvveeee youuuu'
x = re.sub(r"(.)\1{2,}", r"\1", x)
print(x)
-------
love you
"""

def get_clean(x):
    x = str(x).lower().replace('\\', '').replace('_', ' ')
    x = ps.cont_exp(x)  # expand contractions
    x = ps.remove_emails(x)
    x = ps.remove_urls(x)
    x = ps.remove_html_tags(x)
    x = ps.remove_accented_chars(x)
    x = ps.remove_special_chars(x)
    x = re.sub(r"(.)\1{2,}", r"\1", x)  # collapse characters repeated 3+ times
    return x

df['Reviews'] = df['Reviews'].apply(lambda x: get_clean(x))

df.head()

| | Reviews | Sentiment |
|---|---|---|
| 0 | when i first tuned in on this morning news i t… | neg |
| 1 | mere thoughts of going overboard aka babes aho… | neg |
| 2 | why does this movie fall well below standards … | neg |
| 3 | wow and i thought that any steven segal movie … | neg |
| 4 | the story is seen before but that doesn matter… | neg |
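For readers who want to see what this kind of cleaning involves without installing the extra package, the sketch below approximates several of the steps with the standard library only. The function name `clean_text` and all of its regular expressions are simplified assumptions made for illustration. They are not the implementation used by preprocess_kgptalkie, and contraction expansion and accent removal are left out.

```python
import re


def clean_text(text):
    # Hypothetical stand-in for get_clean; every regex here is a simplified assumption.
    text = str(text).lower().replace("\\", "").replace("_", " ")
    text = re.sub(r"\S+@\S+\.\S+", " ", text)           # drop e-mail-like tokens
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)  # drop URLs
    text = re.sub(r"<[^>]+>", " ", text)                # drop HTML tags
    text = re.sub(r"[^a-z0-9\s]", "", text)             # drop special characters
    text = re.sub(r"(.)\1{2,}", r"\1", text)            # collapse characters repeated 3+ times
    return " ".join(text.split())                       # normalise whitespace


print(clean_text("I <b>LOOOVED</b> it!!! Mail me: fan@example.com"))
# prints: i loved it mail me
```

The order matters: e-mail addresses, URLs and tags are removed before special characters, because stripping '@', ':' or '<' first would leave fragments that the earlier patterns could no longer recognise.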

tfidf = TfidfVectorizer(max_features=5000)
X = df['Reviews']
y = df['Sentiment']
X = tfidf.fit_transform(X)
X

<25000x5000 sparse matrix of type '<class 'numpy.float64'>' with 2843804 stored elements in Compressed Sparse Row format>

Here we split X and y into training and test sets, with 80% of the data for training and 20% for testing.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 0)
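In the code above, the vectorizer is fitted on every review before the split, so word statistics from the test rows also feed into the TF-IDF weights. One possible variation, sketched here on a handful of made-up reviews rather than the IMDB data, is to split the raw text first and wrap both steps in a scikit-learn pipeline so that TF-IDF is fitted on the training portion only. The toy texts, labels and variable names are assumptions for illustration.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC

# Toy stand-in data (hypothetical reviews, not the IMDB dataset).
texts = [
    "good great wonderful", "great fun good",
    "wonderful fun great", "good fun wonderful",
    "bad awful terrible", "terrible boring bad",
    "awful boring terrible", "bad boring awful",
]
labels = ["pos"] * 4 + ["neg"] * 4

# Split the raw text first, keeping the class balance in both parts.
text_train, text_test, label_train, label_test = train_test_split(
    texts, labels, test_size=0.25, random_state=0, stratify=labels
)

# The pipeline fits TF-IDF on the training texts only, then trains the classifier.
model = make_pipeline(TfidfVectorizer(max_features=5000), LinearSVC())
model.fit(text_train, label_train)

# Raw strings go straight into predict; the pipeline vectorizes them itself.
predictions = model.predict(text_test)
print(len(text_train), len(text_test))
print(predictions)
```

A side benefit of this layout is that a single object holds both the vectorizer and the classifier, so new text can be passed to `predict` without a separate `transform` call.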

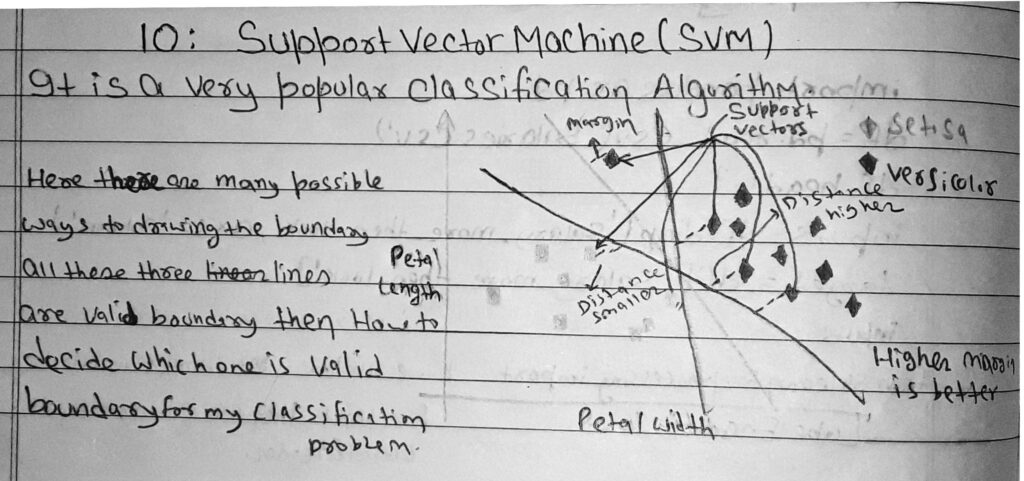

Support Vector Machine

Definition

SVM is a supervised machine learning algorithm that can be used for classification or regression problems. With a non-linear kernel it uses a technique called the kernel trick to transform the data and then finds an optimal boundary between the possible outputs based on these transformations; a linear SVM, as used here, looks for that boundary directly in the original feature space.

The objective of a Linear SVC (Support Vector Classifier) is to fit the data you provide, returning a "best fit" hyperplane that divides, or categorizes, your data. Once you have the hyperplane, you can feed features to the classifier to see what the predicted class is.

clf = LinearSVC()
clf.fit(X_train, y_train)
y_pred = clf.predict(X_test)
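As a rough illustration of what "dividing the data with a hyperplane" means at prediction time, the sketch below scores a feature vector with w·x + b and reads the class off the sign of the score. The weights, bias and three-feature inputs are invented for the example; a trained LinearSVC learns its own values from the data.

```python
# Hypothetical weights and bias for a 3-feature linear classifier.
weights = [0.8, -1.2, 0.5]
bias = -0.1


def decision(features):
    # Signed score: positive on one side of the hyperplane w.x + b = 0, negative on the other.
    return sum(w * x for w, x in zip(weights, features)) + bias


def predict(features):
    # The predicted class depends only on which side of the hyperplane the point falls.
    return "pos" if decision(features) > 0 else "neg"


print(predict([1.0, 0.0, 0.5]))  # score = 0.8 + 0.25 - 0.1 = 0.95, prints pos
print(predict([0.0, 1.0, 0.0]))  # score = -1.2 - 0.1 = -1.3, prints neg
```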

The classification report shows a representation of the main classification metrics on a per-class basis. This gives a deeper intuition of the classifier behavior over global accuracy which can mask functional weaknesses in one class of a multiclass problem.

print(classification_report(y_test, y_pred))

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| neg | 0.87 | 0.87 | 0.87 | 2480 |
| pos | 0.87 | 0.88 | 0.88 | 2520 |
| accuracy | | | 0.87 | 5000 |
| macro avg | 0.87 | 0.87 | 0.87 | 5000 |
| weighted avg | 0.87 | 0.87 | 0.87 | 5000 |
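The per-class columns in the report follow directly from counts of correct and incorrect predictions. The sketch below shows the arithmetic for one class. The counts are hypothetical and chosen for easy arithmetic; they are not taken from the run above, and the helper name `precision_recall_f1` is made up for illustration.

```python
def precision_recall_f1(tp, fp, fn):
    # tp: correctly predicted members of the class
    # fp: other-class samples wrongly predicted as this class
    # fn: members of the class that were predicted as something else
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts for one class.
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=10)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
# prints: precision=0.80 recall=0.89 f1=0.84
```

The support column is simply the number of true samples of each class in the test set, and the macro average is the unweighted mean of the per-class scores.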

x = 'this movie is really good. thanks a lot for making it'
x = get_clean(x)
vec = tfidf.transform([x])

vec.shape

(1, 5000)

clf.predict(vec)

array(['pos'], dtype=object)


The Python pickle module is used for serializing and de-serializing Python object structures. The process of converting a Python object (list, dict, etc.) into a byte stream is called pickling, serialization, flattening or marshalling. The byte stream generated by pickling can be converted back into Python objects by a process called unpickling.

import pickle

with open('model', 'wb') as f:
    pickle.dump(clf, f)

with open('tfidf', 'wb') as f:
    pickle.dump(tfidf, f)
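Saving is only half of the round trip. The sketch below dumps an object and loads it back to show that unpickling restores an equal object. A plain dictionary stands in for clf or tfidf so the example runs on its own, and the temporary directory and file name are assumptions made for the demonstration.

```python
import os
import pickle
import tempfile

# Stand-in object; in the tutorial this would be clf or tfidf.
model = {"name": "demo-model", "weights": [0.8, -1.2, 0.5]}

# Write into a fresh temporary directory so no existing file is overwritten.
path = os.path.join(tempfile.mkdtemp(), "model")

# The with-statement closes the file even if dump raises an exception.
with open(path, "wb") as f:
    pickle.dump(model, f)

# Unpickling: read the byte stream back into a Python object.
with open(path, "rb") as f:
    restored = pickle.load(f)

print(restored == model)  # prints True
```

Only unpickle files from sources you trust, since loading a pickle can execute arbitrary code.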

Conclusions:

- First, we loaded the IMDB movie reviews dataset into a pandas DataFrame.
- Then we defined the get_clean() function and removed unwanted emails, URLs, HTML tags and special characters.
- We converted the text into vectors with the help of the TF-IDF vectorizer.
- After that, we used a linear support vector machine classifier.
- We fit the LinearSVC classifier for binary classification and predicted the sentiment, i.e. positive or negative, on real data.
- Lastly, we dumped the clf and TF-IDF models with the help of the pickle library. In other words, we converted Python objects into byte streams so that they can be stored in a file or database, maintain program state across sessions, or be transported over the network.
