Pandas Crash Course

What is Pandas?

pandas is a fast, powerful, flexible and easy to use open source data analysis and manipulation tool, in Python programming language. It is a high-level data manipulation tool developed by Wes McKinney. It is built on the Numpy package and its key data structure is called the DataFrame. DataFrames allow you to store and manipulate tabular data in rows of observations and columns of variables. Pandas is mainly used for data analysis. Pandas allows importing data from various file formats such as comma-separated values, JSON, SQL, Microsoft Excel. Pandas allows various data manipulation operations such as merging, reshaping, selecting, as well as data cleaning, and data wrangling features.

- DataFrame object for data manipulation with integrated indexing.

- Tools for reading and writing data between in-memory data structures and different file formats.

- Data alignment and integrated handling of missing data.

- Reshaping and pivoting of data sets.

- Label-based slicing, fancy indexing, and subsetting of large data sets.

- Data structure column insertion and deletion.

- Group by engine allowing split-apply-combine operations on data sets.

- Data set merging and joining.

- Hierarchical axis indexing to work with high-dimensional data in a lower-dimensional data structure.

- Time series-functionality: Date range generation and frequency conversion, moving window statistics, moving window linear regressions, date shifting and lagging.

- Provides data filtration.

Dataset

You can download all the datasets used in this notebook from here.

Let's start!

We will first start by importing pandas.

import pandas as pd

We will prepare a python dictionary named data.

data = {

'apple': [3,1,4,5],

'orange': [1, 5, 6, 8]

}

data

{'apple': [3, 1, 4, 5], 'orange': [1, 5, 6, 8]}

type() method returns class type of the argument(object) passed as parameter. dict means that data is a dictionary.

type(data)

dict

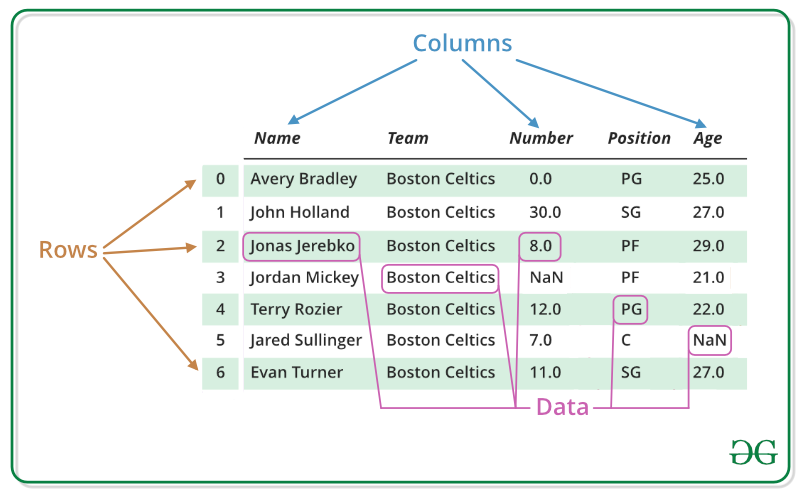

Now we will convert data into a DataFrame. Pandas DataFrame is two-dimensional size-mutable, potentially heterogeneous tabular data structure with labeled axes (rows and columns). A Data frame is a two-dimensional data structure, i.e., data is aligned in a tabular fashion in rows and columns. Pandas DataFrame consists of three principal components, the data, rows, and columns.

df = pd.DataFrame(data) df

| apple | orange | |

|---|---|---|

| 0 | 3 | 1 |

| 1 | 1 | 5 |

| 2 | 4 | 6 |

| 3 | 5 | 8 |

Each column of df is a Series. df['apple'] returns only the column with header 'apple'. If we check the type of this column we can see that its a Series.

df['apple']

0 3 1 1 2 4 3 5 Name: apple, dtype: int64

type(df['apple'])

pandas.core.series.Series

Reading CSV files

Now we will see how to read CSV(Comma Separated Values) files into a pandas dataframe. read_csv() reads a comma-separated values file into DataFrame.

df = pd.read_csv('nba.csv')

head(n) function returns the first n rows for the object based on position. It is useful for quickly testing if your object has the right type of data in it. Below we can see the first 10 rows of df.

df.head(10)

| Name | Team | Number | Position | Age | Height | Weight | College | Salary | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | Avery Bradley | Boston Celtics | 0.0 | PG | 25.0 | 6-2 | 180.0 | Texas | 7730337.0 |

| 1 | Jae Crowder | Boston Celtics | 99.0 | SF | 25.0 | 6-6 | 235.0 | Marquette | 6796117.0 |

| 2 | John Holland | Boston Celtics | 30.0 | SG | 27.0 | 6-5 | 205.0 | Boston University | NaN |

| 3 | R.J. Hunter | Boston Celtics | 28.0 | SG | 22.0 | 6-5 | 185.0 | Georgia State | 1148640.0 |

| 4 | Jonas Jerebko | Boston Celtics | 8.0 | PF | 29.0 | 6-10 | 231.0 | NaN | 5000000.0 |

| 5 | Amir Johnson | Boston Celtics | 90.0 | PF | 29.0 | 6-9 | 240.0 | NaN | 12000000.0 |

| 6 | Jordan Mickey | Boston Celtics | 55.0 | PF | 21.0 | 6-8 | 235.0 | LSU | 1170960.0 |

| 7 | Kelly Olynyk | Boston Celtics | 41.0 | C | 25.0 | 7-0 | 238.0 | Gonzaga | 2165160.0 |

| 8 | Terry Rozier | Boston Celtics | 12.0 | PG | 22.0 | 6-2 | 190.0 | Louisville | 1824360.0 |

| 9 | Marcus Smart | Boston Celtics | 36.0 | PG | 22.0 | 6-4 | 220.0 | Oklahoma State | 3431040.0 |

tail(n) returns last n rows from the object based on position. It is useful for quickly verifying data, for example, after sorting or appending rows.

df.tail(2)

| Name | Team | Number | Position | Age | Height | Weight | College | Salary | |

|---|---|---|---|---|---|---|---|---|---|

| 456 | Jeff Withey | Utah Jazz | 24.0 | C | 26.0 | 7-0 | 231.0 | Kansas | 947276.0 |

| 457 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

Using index_col we can specify the column(s) to use as the row labels of the DataFrame. We can either given as string name or column index. By default the row labels are numbers from 0 to n-1 where n is the total number of rows in the data.

df = pd.read_csv('nba.csv', index_col = 'Name')

df.head()

| Team | Number | Position | Age | Height | Weight | College | Salary | |

|---|---|---|---|---|---|---|---|---|

| Name | ||||||||

| Avery Bradley | Boston Celtics | 0.0 | PG | 25.0 | 6-2 | 180.0 | Texas | 7730337.0 |

| Jae Crowder | Boston Celtics | 99.0 | SF | 25.0 | 6-6 | 235.0 | Marquette | 6796117.0 |

| John Holland | Boston Celtics | 30.0 | SG | 27.0 | 6-5 | 205.0 | Boston University | NaN |

| R.J. Hunter | Boston Celtics | 28.0 | SG | 22.0 | 6-5 | 185.0 | Georgia State | 1148640.0 |

| Jonas Jerebko | Boston Celtics | 8.0 | PF | 29.0 | 6-10 | 231.0 | NaN | 5000000.0 |

Now we will load the IMDB-Movie-Data into df using 'Rank' as the row label.

df = pd.read_csv('IMDB-Movie-Data.csv', index_col = 'Rank')

df.head()

| Title | Genre | Description | Director | Actors | Year | Runtime (Minutes) | Rating | Votes | Revenue (Millions) | Metascore | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Rank | |||||||||||

| 1 | Guardians of the Galaxy | Action,Adventure,Sci-Fi | A group of intergalactic criminals are forced ... | James Gunn | Chris Pratt, Vin Diesel, Bradley Cooper, Zoe S... | 2014 | 121 | 8.1 | 757074 | 333.13 | 76.0 |

| 2 | Prometheus | Adventure,Mystery,Sci-Fi | Following clues to the origin of mankind, a te... | Ridley Scott | Noomi Rapace, Logan Marshall-Green, Michael Fa... | 2012 | 124 | 7.0 | 485820 | 126.46 | 65.0 |

| 3 | Split | Horror,Thriller | Three girls are kidnapped by a man with a diag... | M. Night Shyamalan | James McAvoy, Anya Taylor-Joy, Haley Lu Richar... | 2016 | 117 | 7.3 | 157606 | 138.12 | 62.0 |

| 4 | Sing | Animation,Comedy,Family | In a city of humanoid animals, a hustling thea... | Christophe Lourdelet | Matthew McConaughey,Reese Witherspoon, Seth Ma... | 2016 | 108 | 7.2 | 60545 | 270.32 | 59.0 |

| 5 | Suicide Squad | Action,Adventure,Fantasy | A secret government agency recruits some of th... | David Ayer | Will Smith, Jared Leto, Margot Robbie, Viola D... | 2016 | 123 | 6.2 | 393727 | 325.02 | 40.0 |

df.tail()

| Title | Genre | Description | Director | Actors | Year | Runtime (Minutes) | Rating | Votes | Revenue (Millions) | Metascore | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Rank | |||||||||||

| 996 | Secret in Their Eyes | Crime,Drama,Mystery | A tight-knit team of rising investigators, alo... | Billy Ray | Chiwetel Ejiofor, Nicole Kidman, Julia Roberts... | 2015 | 111 | 6.2 | 27585 | NaN | 45.0 |

| 997 | Hostel: Part II | Horror | Three American college students studying abroa... | Eli Roth | Lauren German, Heather Matarazzo, Bijou Philli... | 2007 | 94 | 5.5 | 73152 | 17.54 | 46.0 |

| 998 | Step Up 2: The Streets | Drama,Music,Romance | Romantic sparks occur between two dance studen... | Jon M. Chu | Robert Hoffman, Briana Evigan, Cassie Ventura,... | 2008 | 98 | 6.2 | 70699 | 58.01 | 50.0 |

| 999 | Search Party | Adventure,Comedy | A pair of friends embark on a mission to reuni... | Scot Armstrong | Adam Pally, T.J. Miller, Thomas Middleditch,Sh... | 2014 | 93 | 5.6 | 4881 | NaN | 22.0 |

| 1000 | Nine Lives | Comedy,Family,Fantasy | A stuffy businessman finds himself trapped ins... | Barry Sonnenfeld | Kevin Spacey, Jennifer Garner, Robbie Amell,Ch... | 2016 | 87 | 5.3 | 12435 | 19.64 | 11.0 |

Now we will print a concise summary of a DataFrame. info() prints information about a DataFrame including the index dtype and columns, non-null values and memory usage.

df.info()

<class 'pandas.core.frame.DataFrame'> Int64Index: 1000 entries, 1 to 1000 Data columns (total 11 columns): Title 1000 non-null object Genre 1000 non-null object Description 1000 non-null object Director 1000 non-null object Actors 1000 non-null object Year 1000 non-null int64 Runtime (Minutes) 1000 non-null int64 Rating 1000 non-null float64 Votes 1000 non-null int64 Revenue (Millions) 872 non-null float64 Metascore 936 non-null float64 dtypes: float64(3), int64(3), object(5) memory usage: 93.8+ KB

shape returns a tuple representing the dimensionality of the DataFrame. It returns (rows,columns) in the dataframe.

df.shape

(1000, 11)

duplicated() return boolean Series denoting duplicate rows. As the sum of all the elements in the series is 0, there are no duplicate rows.

sum(df.duplicated())

0

append() function is used to append rows of other dataframe to the end of the given dataframe, returning a new dataframe object. Below we are appending rows of df to the end of df itself, returning a new object. Hence we can see the number of rows have doubled.

df1 = df.append(df) df1.shape

(2000, 11)

Now if you check the number of duplicated rows you can see that there are 1000 duplicated rows.

df1.duplicated().sum()

1000

drop_duplicates() removes the duplicates rows and returns DataFrame.

df2 = df1.drop_duplicates() df2.shape

(1000, 11)

df1.shape

(2000, 11)

Using inplace we can specify whether to drop duplicates in place or to return a copy.

df1.drop_duplicates(inplace = True) df1.shape

(1000, 11)

Column Cleanup

df.columns gives the column labels of the DataFrame.

df.columns

Index(['Title', 'Genre', 'Description', 'Director', 'Actors', 'Year',

'Runtime (Minutes)', 'Rating', 'Votes', 'Revenue (Millions)',

'Metascore'],

dtype='object')

len() gives the length. There are 11 columns in df.

len(df.columns)

11

To generate a descriptive statistics we can use describe(). Descriptive statistics include those that summarize the central tendency, dispersion and shape of a dataset’s distribution, excluding NaN values.

df.describe()

| Year | Runtime (Minutes) | Rating | Votes | Revenue (Millions) | Metascore | |

|---|---|---|---|---|---|---|

| count | 1000.000000 | 1000.000000 | 1000.000000 | 1.000000e+03 | 872.000000 | 936.000000 |

| mean | 2012.783000 | 113.172000 | 6.723200 | 1.698083e+05 | 82.956376 | 58.985043 |

| std | 3.205962 | 18.810908 | 0.945429 | 1.887626e+05 | 103.253540 | 17.194757 |

| min | 2006.000000 | 66.000000 | 1.900000 | 6.100000e+01 | 0.000000 | 11.000000 |

| 25% | 2010.000000 | 100.000000 | 6.200000 | 3.630900e+04 | 13.270000 | 47.000000 |

| 50% | 2014.000000 | 111.000000 | 6.800000 | 1.107990e+05 | 47.985000 | 59.500000 |

| 75% | 2016.000000 | 123.000000 | 7.400000 | 2.399098e+05 | 113.715000 | 72.000000 |

| max | 2016.000000 | 191.000000 | 9.000000 | 1.791916e+06 | 936.630000 | 100.000000 |

Now we will make a list of the column names.

col = df.columns type(list(col))

list

col

Index(['Title', 'Genre', 'Description', 'Director', 'Actors', 'Year',

'Runtime (Minutes)', 'Rating', 'Votes', 'Revenue (Millions)',

'Metascore'],

dtype='object')

We can rename the columns by assigning a list of new names to df.columns.

col1 = ['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j', 'k'] df.columns = col1 df.head()

| a | b | c | d | e | f | g | h | i | j | k | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Rank | |||||||||||

| 1 | Guardians of the Galaxy | Action,Adventure,Sci-Fi | A group of intergalactic criminals are forced ... | James Gunn | Chris Pratt, Vin Diesel, Bradley Cooper, Zoe S... | 2014 | 121 | 8.1 | 757074 | 333.13 | 76.0 |

| 2 | Prometheus | Adventure,Mystery,Sci-Fi | Following clues to the origin of mankind, a te... | Ridley Scott | Noomi Rapace, Logan Marshall-Green, Michael Fa... | 2012 | 124 | 7.0 | 485820 | 126.46 | 65.0 |

| 3 | Split | Horror,Thriller | Three girls are kidnapped by a man with a diag... | M. Night Shyamalan | James McAvoy, Anya Taylor-Joy, Haley Lu Richar... | 2016 | 117 | 7.3 | 157606 | 138.12 | 62.0 |

| 4 | Sing | Animation,Comedy,Family | In a city of humanoid animals, a hustling thea... | Christophe Lourdelet | Matthew McConaughey,Reese Witherspoon, Seth Ma... | 2016 | 108 | 7.2 | 60545 | 270.32 | 59.0 |

| 5 | Suicide Squad | Action,Adventure,Fantasy | A secret government agency recruits some of th... | David Ayer | Will Smith, Jared Leto, Margot Robbie, Viola D... | 2016 | 123 | 6.2 | 393727 | 325.02 | 40.0 |

Now we will again change and use the original names.

df.columns = col df.head(0)

| Title | Genre | Description | Director | Actors | Year | Runtime (Minutes) | Rating | Votes | Revenue (Millions) | Metascore | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Rank |

We can even rename the columns using the rename() function. We have passed a dictionary as the parameter which specifies the old name:new name as the key-value pair.

df.rename(columns={

'Runtime (Minutes)': 'Runtime',

'Revenue (Millions)': 'Revenue'

}, inplace= True)

df.columns

Index(['Title', 'Genre', 'Description', 'Director', 'Actors', 'Year',

'Runtime', 'Rating', 'Votes', 'Revenue', 'Metascore'],

dtype='object')

You can compare the previous names displayed below and the new names displayed above.

col

Index(['Title', 'Genre', 'Description', 'Director', 'Actors', 'Year',

'Runtime (Minutes)', 'Rating', 'Votes', 'Revenue (Millions)',

'Metascore'],

dtype='object')

Missing values

Now we will see how to handle missing values(nan). For that we will import numpy.

import numpy as np

nan is a numpy constant which represents Not a Number (NaN).

np.nan

nan

isnull() function detect missing values in the given series object. It return a boolean same-sized object indicating if the values are NA. Missing values gets mapped to True and non-missing value gets mapped to False.

df.isnull().head(10)

| Title | Genre | Description | Director | Actors | Year | Runtime | Rating | Votes | Revenue | Metascore | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Rank | |||||||||||

| 1 | False | False | False | False | False | False | False | False | False | False | False |

| 2 | False | False | False | False | False | False | False | False | False | False | False |

| 3 | False | False | False | False | False | False | False | False | False | False | False |

| 4 | False | False | False | False | False | False | False | False | False | False | False |

| 5 | False | False | False | False | False | False | False | False | False | False | False |

| 6 | False | False | False | False | False | False | False | False | False | False | False |

| 7 | False | False | False | False | False | False | False | False | False | False | False |

| 8 | False | False | False | False | False | False | False | False | False | True | False |

| 9 | False | False | False | False | False | False | False | False | False | False | False |

| 10 | False | False | False | False | False | False | False | False | False | False | False |

To get a better picture we can sum the above result. Now we can see that Revenue has 128 null values and Metascore has 64 null values.

df.isnull().sum()

Title 0 Genre 0 Description 0 Director 0 Actors 0 Year 0 Runtime 0 Rating 0 Votes 0 Revenue 128 Metascore 64 dtype: int64

Alternatively, we can even use isna(). It returns a boolean same-sized object indicating if the values are NA. NA values, such as None or numpy.NaN, gets mapped to True values. Everything else gets mapped to False values.

df.isna().sum()

Title 0 Genre 0 Description 0 Director 0 Actors 0 Year 0 Runtime 0 Rating 0 Votes 0 Revenue 128 Metascore 64 dtype: int64

dropna() removes the rows which contain missing values. We can see that 162 rows are removed.

df1 = df.dropna() df1.shape

(838, 11)

If we specify axis = 1, columns which contain missing values are dropped.

df2 = df.dropna(axis = 1) df2.head(3)

| Title | Genre | Description | Director | Actors | Year | Runtime | Rating | Votes | |

|---|---|---|---|---|---|---|---|---|---|

| Rank | |||||||||

| 1 | Guardians of the Galaxy | Action,Adventure,Sci-Fi | A group of intergalactic criminals are forced ... | James Gunn | Chris Pratt, Vin Diesel, Bradley Cooper, Zoe S... | 2014 | 121 | 8.1 | 757074 |

| 2 | Prometheus | Adventure,Mystery,Sci-Fi | Following clues to the origin of mankind, a te... | Ridley Scott | Noomi Rapace, Logan Marshall-Green, Michael Fa... | 2012 | 124 | 7.0 | 485820 |

| 3 | Split | Horror,Thriller | Three girls are kidnapped by a man with a diag... | M. Night Shyamalan | James McAvoy, Anya Taylor-Joy, Haley Lu Richar... | 2016 | 117 | 7.3 | 157606 |

Imputation

Imputation is the process of replacing missing data with substituted values.

fillna(0) fills NA/NaN values with 0. You can see that all the nan values are replaced by 0.

df3 = df.fillna(0) df3.isna().sum()

Title 0 Genre 0 Description 0 Director 0 Actors 0 Year 0 Runtime 0 Rating 0 Votes 0 Revenue 0 Metascore 0 dtype: int64

df has 128 null values in Revenue and 64 null values in Metascore

df.isnull().sum()

Title 0 Genre 0 Description 0 Director 0 Actors 0 Year 0 Runtime 0 Rating 0 Votes 0 Revenue 128 Metascore 64 dtype: int64

revenue is a Series which contains the values of the column Revenue.

revenue = df['Revenue'] type(revenue)

pandas.core.series.Series

It has some NaN values.

revenue.tail()

Rank 996 NaN 997 17.54 998 58.01 999 NaN 1000 19.64 Name: Revenue, dtype: float64

mean() returns the mean of the values for the requested axis. Here we will get the mean of the values in revenue.

revenue_mean = revenue.mean() revenue_mean

82.95637614678897

Now we will fill the missing values with the mean.

revenue.fillna(revenue_mean, inplace= True) revenue.tail()

Rank 996 82.956376 997 17.540000 998 58.010000 999 82.956376 1000 19.640000 Name: Revenue, dtype: float64

We can see that now there are no null values.

revenue.isnull().sum()

0

We will modify df by adding the new revenue values. Now we can see that Revenue doesn't have any null values but Metascore has null values.

df['Revenue'] = revenue df.isnull().sum()

Title 0 Genre 0 Description 0 Director 0 Actors 0 Year 0 Runtime 0 Rating 0 Votes 0 Revenue 0 Metascore 64 dtype: int64

In the same way we will replace the null values in Metascore by the mean of the values in Metascore.

metascore = df['Metascore']

metascore_mean = metascore.mean()

print("metascore_mean =",metascore_mean)

metascore.fillna(metascore_mean, inplace = True)

df['Metascore'] = metascore

df.isnull().sum()

metascore_mean = 58.98504273504273

Title 0 Genre 0 Description 0 Director 0 Actors 0 Year 0 Runtime 0 Rating 0 Votes 0 Revenue 0 Metascore 0 dtype: int64

describe() gives statistical data of the numerical columns only.

df.describe()

| Year | Runtime | Rating | Votes | Revenue | Metascore | |

|---|---|---|---|---|---|---|

| count | 1000.000000 | 1000.000000 | 1000.000000 | 1.000000e+03 | 1000.000000 | 1000.000000 |

| mean | 2012.783000 | 113.172000 | 6.723200 | 1.698083e+05 | 82.956376 | 58.985043 |

| std | 3.205962 | 18.810908 | 0.945429 | 1.887626e+05 | 96.412043 | 16.634858 |

| min | 2006.000000 | 66.000000 | 1.900000 | 6.100000e+01 | 0.000000 | 11.000000 |

| 25% | 2010.000000 | 100.000000 | 6.200000 | 3.630900e+04 | 17.442500 | 47.750000 |

| 50% | 2014.000000 | 111.000000 | 6.800000 | 1.107990e+05 | 60.375000 | 58.985043 |

| 75% | 2016.000000 | 123.000000 | 7.400000 | 2.399098e+05 | 99.177500 | 71.000000 |

| max | 2016.000000 | 191.000000 | 9.000000 | 1.791916e+06 | 936.630000 | 100.000000 |

info() gives information about all the columns.

df.info()

<class 'pandas.core.frame.DataFrame'> Int64Index: 1000 entries, 1 to 1000 Data columns (total 11 columns): Title 1000 non-null object Genre 1000 non-null object Description 1000 non-null object Director 1000 non-null object Actors 1000 non-null object Year 1000 non-null int64 Runtime 1000 non-null int64 Rating 1000 non-null float64 Votes 1000 non-null int64 Revenue 1000 non-null float64 Metascore 1000 non-null float64 dtypes: float64(3), int64(3), object(5) memory usage: 93.8+ KB

We can even get the describtion about a specific column.

df['Genre'].describe()

count 1000 unique 207 top Action,Adventure,Sci-Fi freq 50 Name: Genre, dtype: object

value_counts() returns a Series containing counts of unique values. The resulting object will be in descending order so that the first element is the most frequently-occurring element. Below we have displayed the counts of the first 10 unique values in Genre column.

df['Genre'].value_counts().head(10)

Action,Adventure,Sci-Fi 50 Drama 48 Comedy,Drama,Romance 35 Comedy 32 Drama,Romance 31 Comedy,Drama 27 Action,Adventure,Fantasy 27 Animation,Adventure,Comedy 27 Comedy,Romance 26 Crime,Drama,Thriller 24 Name: Genre, dtype: int64

unique() returns all the unique values in order of appearance. len() is used to get the total number of unique values in Genre which is 207.

len(df['Genre'].unique())

207

Corr method

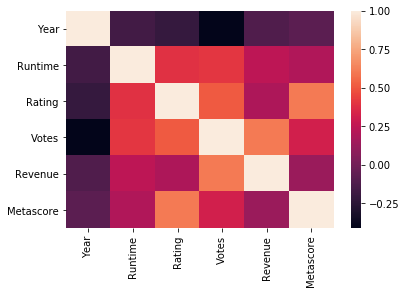

corr() is used to compute pairwise correlation of columns, excluding NA/null values. For any non-numeric data type columns in the dataframe it is ignored.

corrmat = df.corr() corrmat

| Year | Runtime | Rating | Votes | Revenue | Metascore | |

|---|---|---|---|---|---|---|

| Year | 1.000000 | -0.164900 | -0.211219 | -0.411904 | -0.117562 | -0.076077 |

| Runtime | -0.164900 | 1.000000 | 0.392214 | 0.407062 | 0.247834 | 0.202239 |

| Rating | -0.211219 | 0.392214 | 1.000000 | 0.511537 | 0.189527 | 0.604723 |

| Votes | -0.411904 | 0.407062 | 0.511537 | 1.000000 | 0.607941 | 0.318116 |

| Revenue | -0.117562 | 0.247834 | 0.189527 | 0.607941 | 1.000000 | 0.132304 |

| Metascore | -0.076077 | 0.202239 | 0.604723 | 0.318116 | 0.132304 | 1.000000 |

Now we will visualize the correlation. For that we have imported seaborn library.

import seaborn as sns

heatmap() plots rectangular data as a color-encoded matrix. A heatmap is a two-dimensional graphical representation of data where the individual values that are contained in a matrix are represented as colors.

sns.heatmap(corrmat)

Now we will draw plots using matplotlib. For that we will import pyplot from matplotlib.

import matplotlib.pyplot as plt

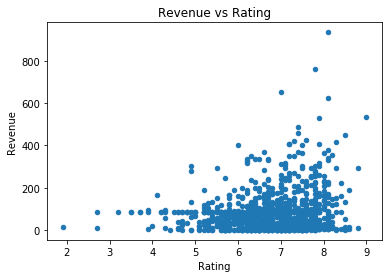

plot() makes plots of Series or DataFrame. Here we are making a scatter plot of Rating versus Revenue

df.plot(kind = 'scatter', x = 'Rating', y = 'Revenue', title = 'Revenue vs Rating')

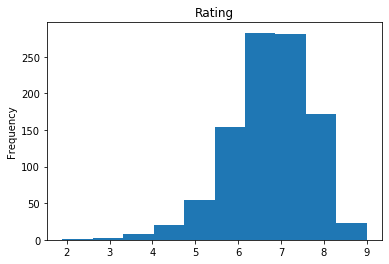

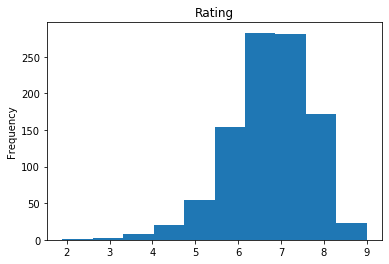

Now we will plot a histogram of Rating.

df['Rating'].plot(kind = 'hist', title = 'Rating')

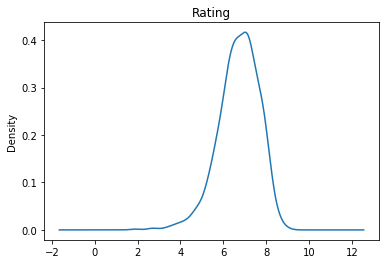

Here we have drawn a Kernel Density Estimation plot of Rating.

df['Rating'].plot(kind = 'kde', title = 'Rating')

The above plots shows us the distribution of values in Rating. Most of the ratings are disrtibuted between 6 and 8.

df['Rating'].value_counts()

7.1 52 6.7 48 7.0 46 6.3 44 6.6 42 7.2 42 7.3 42 6.5 40 7.8 40 6.2 37 6.8 37 7.5 35 6.4 35 7.4 33 6.9 31 6.1 31 7.6 27 7.7 27 5.8 26 6.0 26 8.1 26 7.9 23 5.7 21 8.0 19 5.9 19 5.6 17 5.5 14 5.3 12 5.4 12 5.2 11 8.2 10 4.9 7 8.3 7 4.7 6 8.5 6 4.6 5 5.1 5 5.0 4 4.8 4 4.3 4 8.4 4 3.9 3 8.6 3 8.8 2 2.7 2 4.2 2 3.5 2 3.7 2 9.0 1 3.2 1 4.0 1 4.5 1 4.4 1 4.1 1 1.9 1 Name: Rating, dtype: int64

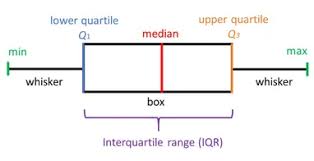

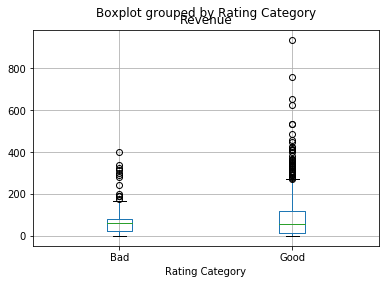

Now we will plot a box plot. Box plots show the five-number summary of a set of data: including the minimum, first (lower) quartile, median, third (upper) quartile, and maximum. Box plots divide the data into sections that each contain approximately 25% of the data in that set. The first quartile is the 25th percentile. Second quartile is 50th percentile and third quartile is 75th percentile.

The points outside the whiskers of the boxplot are called outliers. These "too far away" points are called "outliers", because they "lie outside" the range in which we expect them.

df['Rating'].plot(kind = 'box')

You can compare the values below and the values shown by the boxplot.

df['Rating'].describe()

count 1000.000000 mean 6.723200 std 0.945429 min 1.900000 25% 6.200000 50% 6.800000 75% 7.400000 max 9.000000 Name: Rating, dtype: float64

Now we will add a new column Rating Category to df. If the Rating is more than 6.2 then Rating Category will be good or it will be bad.

rating_cat = []

for rate in df['Rating']:

if rate > 6.2:

rating_cat.append('Good')

else:

rating_cat.append('Bad')

rating_cat[:20]

['Good', 'Good', 'Good', 'Good', 'Bad', 'Bad', 'Good', 'Good', 'Good', 'Good', 'Good', 'Good', 'Good', 'Good', 'Good', 'Good', 'Good', 'Good', 'Good', 'Good']

df['Rating Category'] = rating_cat df.head()

| Title | Genre | Description | Director | Actors | Year | Runtime | Rating | Votes | Revenue | Metascore | Rating Category | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Rank | ||||||||||||

| 1 | Guardians of the Galaxy | Action,Adventure,Sci-Fi | A group of intergalactic criminals are forced ... | James Gunn | Chris Pratt, Vin Diesel, Bradley Cooper, Zoe S... | 2014 | 121 | 8.1 | 757074 | 333.13 | 76.0 | Good |

| 2 | Prometheus | Adventure,Mystery,Sci-Fi | Following clues to the origin of mankind, a te... | Ridley Scott | Noomi Rapace, Logan Marshall-Green, Michael Fa... | 2012 | 124 | 7.0 | 485820 | 126.46 | 65.0 | Good |

| 3 | Split | Horror,Thriller | Three girls are kidnapped by a man with a diag... | M. Night Shyamalan | James McAvoy, Anya Taylor-Joy, Haley Lu Richar... | 2016 | 117 | 7.3 | 157606 | 138.12 | 62.0 | Good |

| 4 | Sing | Animation,Comedy,Family | In a city of humanoid animals, a hustling thea... | Christophe Lourdelet | Matthew McConaughey,Reese Witherspoon, Seth Ma... | 2016 | 108 | 7.2 | 60545 | 270.32 | 59.0 | Good |

| 5 | Suicide Squad | Action,Adventure,Fantasy | A secret government agency recruits some of th... | David Ayer | Will Smith, Jared Leto, Margot Robbie, Viola D... | 2016 | 123 | 6.2 | 393727 | 325.02 | 40.0 | Bad |

We can specify a column in the DataFrame to pandas.DataFrame.groupby() using by. One box-plot will be done per value of columns in by. Hence we have got 2 boxplots separately for good and bad.

df.boxplot(column= 'Revenue', by = 'Rating Category')

0 Comments